May 11, 2022

LinkedIn, Digital Health and Ethics

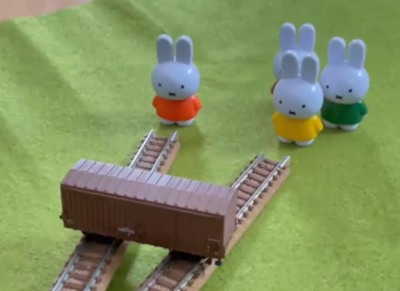

The development of self-driving cars has shone a bright light on the tramway challenge (better known as the trolley problem), which has reached meme status.

The digital health revolution is no different and has created gazillions of ethical challenges. You can call that a goldmine of ethical questions, if you like your glass half-full or are a professional ethicist. Or you can call it a minefield, if you are an entrepreneur in health tech, or an investor backing such a venture. Ethics-wise, health tech does not play in the same league as another regulated industry, miltech. The concepts of risk and benefit are used in both leagues, but with meanings that are not compatible. Nor does health tech play in the same league as “plain” tech (aka YC). There, the concepts of risk and benefit mostly share compatible definitions, i.e. they use roughly the same axes to describe their space of operations. Still, the benchmarks and values measured along these axes differ widely.

Take LinkedIn as an example. Its T&Cs forbid users from using any form of automated tool to interact with its app/website/API/whatever. The sanction for doing so, after a couple of warnings, is a ban from the service, with what appears to be no possible recourse. And, as I know firsthand, LinkedIn threatens innocent users with this accusation without sharing any evidence or specific facts.

Whilst extremely frustrated, I’m still using LinkedIn. And, whilst there are (I assume) a very large number of frustrated and/or banned users, none make a scandal of it. It’s a fair game. LinkedIn is a communication service, where the deal is that I use the service for free, but they are in control of their T&Cs and what I post is theirs to control. Highly unbalanced, etc., but until someone comes up with a better solution, I’ll take what’s available.

I will not speculate beyond reason on the intent behind these T&Cs, but here again, I live with it. I would prefer to be able, for instance, to script something to filter my stream of posts and cut the adverts and the things that I don’t want, or to automatically create a LinkedIn post with a blurb and a link each time I publish a new blog post. But I understand that this would not be profitable for the owners of the service.

Now imagine the same example for a digital health application. For a user, being able to automate the writing or reading of data to/from a service/product can literally mean an order-of-magnitude improvement in managing the risks associated with a serious chronic disease. The Pioneers of Self Tech are a great illustration of this. They are spearheading the #WeAreNotWaiting movement, bypassing and/or preceding commercial manufacturers with software applications that read/write from/to their devices/services.

Would T&Cs similar to LinkedIn’s be ethically acceptable in that context? In other words, could manufacturers (e.g. of blood glucose monitors) be ethical while actively preventing their users from accessing their own data with an automated tool, and even denying them the use of their device/service if they attempted such access?